Embracing Cognitive Design in the Age of Ambient Computing

It was early 2000, and I found myself standing on what I believed was the threshold of a digital revolution. As luck would have it, I was wrapping up work on several TV show websites at NBC Internet when a role opened up in our ambitious new venture, the Broadband unit. It was a small, diverse team that included people from Apple and Netscape whom I looked up to as visionaries. Our mission? To push the boundaries of what was possible and lay the groundwork for what we believed was the future of computing.

While our primary focus was bringing the promise of rich media content to people with DSL broadband connections, we also ran skunkworks projects using experimental broadband spectrum from our parent company at the time, GE. All of this centered on what we called “Seamless Living.”

This included seamless handoffs of phone calls between your car phone and your home landline, continuous music playback that followed you from your home to your car and beyond, and early concepts of location-based services and context-based computing.

We were sketching a vision of a world where technology would be integrated seamlessly into our daily lives. This was long before most people could even conceive of carrying a computer in their pocket, and as first movers, we found that the world simply wasn’t ready yet. It would take the industry more than twenty years to bring some of our ideas to life.

From Concept to Reality

Fast forward to 2023. Peter McNulty, Head of Experience Design at my former company, Momentum Design Lab, and I took the stage at Web Summit to discuss concepts around smart auto and ambient computing. The days leading up to it became a moment of reflection on how far we’ve come since my early days at NBC. The ideas we explored back then have evolved into a far more sophisticated ecosystem, and what has been built today surpasses what I thought possible.

To illustrate this evolution, we presented a video of Peter McNulty and me en route by car to Web Summit, showcasing some of the car’s ambient computing features in action:

Proactive Assistance: When Peter was running late, his assistant automatically prompted him to reroute his journey, optimizing for time without any manual input.

Contextual Information Delivery: The assistant pulled relevant information from Peter’s email to provide directions to specific locations within the venue for our speaking engagement.

Multimodal Transitions: As we approached the venue, the directions transitioned smoothly from the car’s navigation system to walking directions on his phone.

Adaptive Guidance: The phone then guided us through check-in and on to the stage, adjusting its instructions based on our location and the event schedule.

The video captured how ambient computing creates a fluid context in which the experience spans different devices and interaction modes. It’s not about individual smart devices; it’s about crafting an ecosystem that anticipates needs and guides users through complex scenarios with ease.

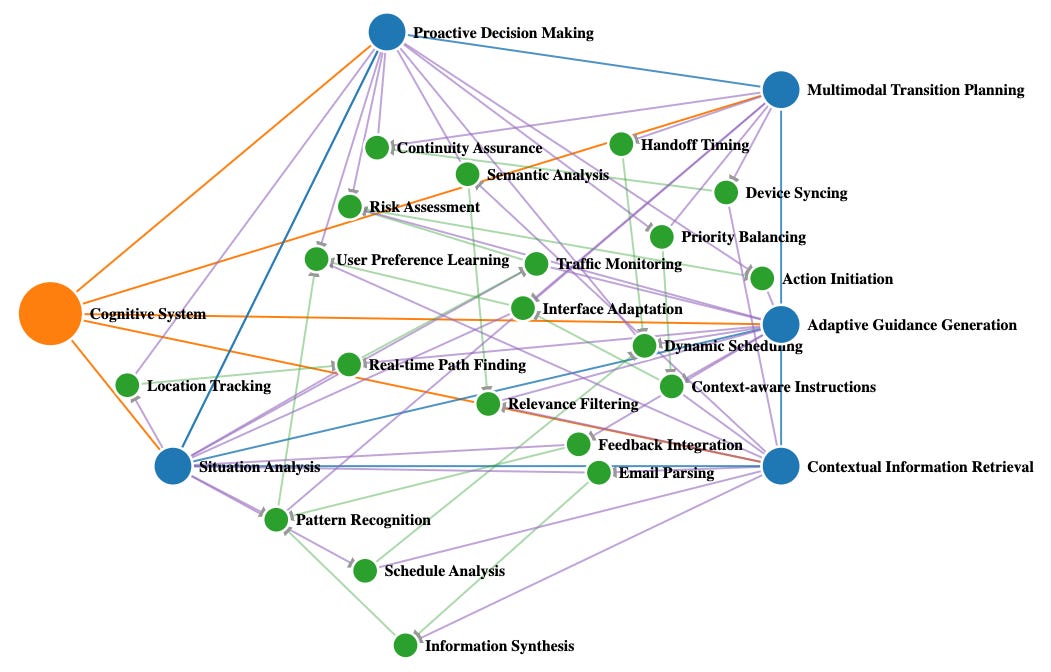

While the video captures the essence of ease of use and fluidity, the orchestration of interconnected cognitive jobs being executed proactively behind the scenes is easier to demonstrate with a graph, as shown below.

The Evolution of Interface Design

The journey from those experimental days at NBC to today’s ambient computing mirrors the broader evolution of interface design. At my old agency, we were recently tasked with designing cabin experience use cases for a major automotive company’s mobility unit. Like the video above, the project showed how we had to adapt our approach to interface design in the age of ambient computing.

The project pushed us to think well beyond traditional UI paradigms. We weren’t just designing for screens; we were choreographing experiences that transitioned seamlessly between the user’s phone, audio interfaces, gestures, a heads-up display, external robotic accessories, the vehicle’s smart glass interface, and haptic controls. As you can imagine, there are a lot of touchpoints in and around a car that is also a high-performance computer running machine learning models on a hybrid cloud/on-device AI architecture, and the bounds of possibility open up significantly. We reconsidered how information and controls could shift with context, how smart glass could provide augmented reality overlays, and how different interaction modalities could work in harmony.

Rethinking User Interaction

At Web Summit, we introduced our concept of Cognitive Design (read the whitepaper), a paradigm shift in designing user interactions for the age of ambient computing. It goes well beyond traditional design thinking by focusing on the mental processes that underpin our interactions with technology.

Understanding Cognitive Jobs

At the center of Cognitive Design is the idea of “cognitive jobs”: the mental tasks and processes users engage in when interacting with different types of technology. These jobs often happen behind the scenes in the user’s mind and aren’t always visible in their actions. For example, when you use a navigation app, the visible job might be getting directions to a destination, but the underlying cognitive jobs could include:

Recalling the exact address of the location

Estimating the amount of time based on traffic

Deciding between different routes

Remembering personal preferences like avoiding specific highways

By designing around these cognitive jobs, we can create interfaces that feel intuitive and align more closely with users’ thought processes.
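To make the navigation example concrete, here is a minimal Python sketch of a system taking over two of those cognitive jobs: deciding between routes and remembering a preference to avoid certain highways. It is an illustration under stated assumptions, not a production router. The `Route`, `Preferences`, and `choose_route` names and the sample highways are hypothetical, and the traffic-adjusted travel times are assumed to come from some navigation service.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Route:
    name: str
    minutes: float  # assumed traffic-adjusted estimate from a navigation service
    highways: set[str] = field(default_factory=set)


@dataclass
class Preferences:
    # Remembered on the user's behalf instead of recalled on every trip.
    avoid_highways: set[str] = field(default_factory=set)


def choose_route(routes: list[Route], prefs: Preferences) -> Route | None:
    """Take over 'deciding between routes': drop any route that uses an
    avoided highway, then return the fastest remaining one (or None)."""
    allowed = [r for r in routes if not (r.highways & prefs.avoid_highways)]
    if not allowed:
        return None
    return min(allowed, key=lambda r: r.minutes)


# Hypothetical sample data for illustration only.
routes = [
    Route("Via I-280", 22, {"I-280"}),
    Route("Surface streets", 27),
    Route("Via US-101", 25, {"US-101"}),
]
prefs = Preferences(avoid_highways={"I-280"})
print(choose_route(routes, prefs).name)  # Via US-101
```

With a remembered preference to avoid I-280, the fastest route is skipped and the next-fastest allowed option is returned, so the driver never has to weigh that trade-off consciously.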

Principles of Cognitive Design

Mental Model Alignment: Designing interfaces that match the user's mental models of how the system should work.

Cognitive Load Reduction: Minimizing the mental effort required to use a system by offloading key cognitive tasks to AI.

Contextual Adaptation: Creating systems that understand and adapt to the user’s current cognitive state and environment.

Predictive Assistance: Anticipating the user's needs based on cognitive patterns and providing proactive support.

Designing for Multiple Modalities

With ambient computing, we’re not just designing for single interfaces or devices; we’re creating experiences that span multiple modalities, including visual, auditory, tactile, gestural, and more. Each modality supports different types of cognitive jobs, and the modalities often work together to create a seamless experience for the user.

Bridging Cognitive Jobs Across Modalities

Visual Modality: Supports pattern recognition, spatial understanding, and quick information scanning. Examples include AR overlays and smart glasses for navigation.

Auditory Modality: Aids attention direction, memory reinforcement, and multitasking. For example, voice alerts for upcoming turns while driving.

Tactile Modality: Provides immediate, attention-grabbing, nonverbal cues for conveying important information. For example, a steering wheel that vibrates when the car drifts toward another lane.

Gestural Modality: Enables intuitive control and spatial interactions. For example, hand gestures to manipulate 3D visual interfaces in an augmented or virtual reality environment.
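One way to reason about the modality descriptions above is as a lookup from each modality to the cognitive jobs it supports, filtered by what the current context allows. The sketch below is a hypothetical Python illustration, not a method from the whitepaper: `MODALITY_SUPPORTS` and `modalities_for` are invented names, the job labels are paraphrased, and two simplifications are assumed (tactile “attention-grabbing” is treated as attention direction, and gestural “spatial interactions” as spatial understanding).

```python
from __future__ import annotations

# Hypothetical lookup: which cognitive jobs each modality can support.
# Labels are paraphrased (and slightly simplified) from the text above.
MODALITY_SUPPORTS: dict[str, set[str]] = {
    "visual": {"pattern recognition", "spatial understanding", "information scanning"},
    "auditory": {"attention direction", "memory reinforcement", "multitasking"},
    "tactile": {"attention direction", "nonverbal cues"},
    "gestural": {"intuitive control", "spatial understanding"},
}


def modalities_for(job: str, unavailable: frozenset[str] | set[str] = frozenset()) -> list[str]:
    """Return, in alphabetical order, the modalities that support `job`,
    skipping any the current context rules out (e.g. hands on the wheel
    might rule out "gestural")."""
    return sorted(
        modality
        for modality, jobs in MODALITY_SUPPORTS.items()
        if job in jobs and modality not in unavailable
    )


# Example: a lane-drift warning needs to direct the driver's attention.
print(modalities_for("attention direction"))                # ['auditory', 'tactile']
print(modalities_for("attention direction", {"auditory"}))  # ['tactile']
```

If the audio channel is already occupied, for instance by a phone call, excluding `"auditory"` leaves the vibrating steering wheel as the fallback, which is the kind of cross-modality handoff the section describes.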

Cognitive Design in Practice

To illustrate how Cognitive Design and multimodal interactions work in practice, consider a smart home environment:

Morning Routine Optimization: Involves cognitive jobs such as prioritizing tasks and adjusting to the environment. Modalities include visual (subtle lighting changes), auditory (progressive, gentle wake-up sounds), and tactile (smart bed adjustments).

Smart Kitchen Assistance: Meal planning and cooking optimization, with cognitive jobs that include managing recipes and tracking ingredients. Modalities may include visual (AR recipe displays), auditory (voice-controlled instructions), and tactile (interacting with smart appliances).

Work-from-Home Productivity: Focuses on maintaining concentration and enabling collaborative communication. Modalities may include visual (adaptive lighting), auditory (noise-canceling adjustments), and gestural (hand movements to navigate virtual workspaces).

Health and Wellness Monitoring: Focuses on self-awareness and habit formation, with modalities including visual (health metrics displays), auditory (reminders), and tactile (nudges for posture correction).

Evening Relaxation and Planning: Involves stress reduction and next-day preparation, with modalities including visual (calm lighting), auditory (soothing music), and voice interaction.

By designing for these cognitive jobs across different modalities, we create environments that intuitively support our daily activities, reducing cognitive load and enhancing our well-being and productivity.

Enabling Ambient Intelligence With Ethical Considerations

None of this would be possible without major new advances in AI/ML, the central nervous system of ambient computing, which enables software to understand context, predict our intentions, and seamlessly orchestrate between different modalities.

Imagine AI that uses real-time causal analysis of thousands of data points around you to predict when you’re about to hit a productivity slump and adjust your environment accordingly; that understands and contextualizes the work you’re doing and proactively pulls up relevant resources just as you need them; and that learns your preferences, anticipates your needs, and surfaces the best modality as you carry out each task.

As we push the boundaries of what’s possible, we’ll have to address some very complex ethical questions. Privacy, consent, and inclusivity are not afterthoughts; they are fundamental principles that designers will need to integrate into every aspect of our work. As the example above suggests, we also need to consider how we affect the end user. We could accidentally create AI that is mentally abusive and manipulative: a system that, while proactively working to accomplish the cognitive job it supports, conditions people into unhealthy behavioral patterns.

Moving forward, designers should keep this in mind: “Just because we can doesn’t mean that we should.”

To design AI experiences and systems that put human interests squarely at the center, we all need to commit to principles such as:

Being transparent about data collection and usage

Respecting user privacy and autonomy

Ensuring inclusivity and accessibility for all users

Building resistance to misuse and manipulation

Providing clear reasons for decisions

These simply cannot be afterthoughts. To create ambient computing environments that are not only powerful and intuitive but also trustworthy and ethical, designers need to follow at least these foundational principles and build them into everything we make.

Cognitive Design Techniques

Cognitive Interviews: Deep questioning sessions to map users' thought processes, probing into mental framing, step-by-step reasoning, and decision-making strategies.

Concept Mapping: Users create visual diagrams of key concepts and relationships, revealing their mental models and knowledge structures within a domain.

Behavioral Experiments: Carefully designed tasks that map to real-world Cognitive Jobs, modulating factors like information volume and conceptual difficulty to assess cognitive performance.

Emotion Detection: Utilizes inputs like voice, text, or facial expressions to identify users' emotions, uncovering affective states related to cognitive processes.

Cognitive Walkthroughs: Users vocalize their thoughts while completing tasks, revealing invisible thought processes and critical decision points in their cognitive workflow.

Longitudinal Studies: Extended research that follows users over weeks, months, or years, tracking the evolution of Cognitive Jobs as users' expertise grows over time.

Interactive Feedback Loops: Ongoing cycles of user input, AI output, and user feedback, enabling collaborative assistance with humans in the loop while refining AI responses.

Human-AI Assistance Testing: Observes users collaborating with prototype AI systems to identify gaps in capability and cognition, revealing where human-AI coordination breaks down.

Cognitive User Testing: Users complete tasks using think-aloud protocols, allowing researchers to assess problem-solving approaches, misconceptions, and cognitive friction points.

Empathy Interviews: Open-ended discussions focused on users' thoughts, feelings, hopes, and fears concerning AI augmentation, uncovering emotional and social needs related to cognitive assistance.

Long-Term In Situ Testing: Deploys AI prototypes in real environments for extended periods, tracking changes in Cognitive Jobs as users’ knowledge of and interaction with the AI system evolve.

The Role of Designers is Evolving

The role of the designer has evolved along with new technologies and capabilities. In this new environment, research and strategic thinking become even more important because of the abstract nature of seamless handoffs between the visual, the physical, and the invisible. We’re no longer creating linear, task-based interfaces; we’re blending experiences that flow seamlessly across multiple dimensions of our daily lives.

Designers in this new era of ambient design will need to be:

System Thinkers: Have the ability to understand and design across abstract dimensions

Ethicists: Have a strong sense of morality and see the implications of this pervasive technology

Futurists: Have the ability to anticipate and design for the impact of emerging intelligent technologies

Collaborators: Have the ability to work effectively across new disciplines and stakeholder groups

The Invisible Revolution

Reflecting on this journey from the early broadband experiments to tomorrow’s promise of ambient computing, I’m excited for what comes next. The future of interface design is ambient: it will no longer rely on scripted workflows but on the fluidity of actions and thoughts, weaving technology across the fabric of our lives until it becomes an invisible yet indispensable part of our daily routines.

Yet we must not forget that at the heart of these technological breakthroughs is the human experience. Our job as designers is to enhance human potential by making lives easier, more productive, and ultimately more fulfilling, and to do so with ethics and responsibility, in ways we are only beginning to imagine. It’s up to us to shape this new era of seamless living into a future that truly serves humanity in the best ways possible.

It’s been an incredible journey from my early days to now, and I have a feeling that the most exciting chapters have yet to be written.